AI is already changing research and product development at Philly-based NextFab

NextFab has been using this technology, which “learns” and accelerates with repeated use, to speed web research and design new products, processes, logos, legal contracts, and more.

Tech developers, tinkerers, device engineers, artists, and start-up founders are experimenting with ChatGPT and other generative artificial intelligence platforms — including at NextFab, a Philadelphia firm that offers digital hardware and software tools, know-how, and venture funding to fee-paying users.

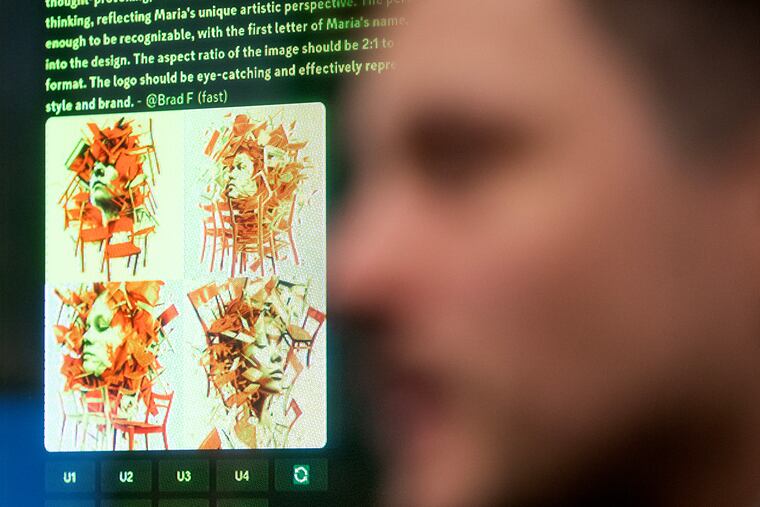

At its “makerspaces” on Washington Avenue in Point Breeze and North American Street on the west edge of Kensington, NextFab users, staff, and visitors have been applying this software, which “learns” and accelerates with repeated use, to speed web research and design new products, processes, logos, legal contracts, and thought experiments.

Fans say AI platforms promise greater efficiency and opportunity, while challenging established work roles and ideas on the nature and use of intellectual property.

On the eve of a March 22 AI demo gathering at the American Street location, Evan Malone, the Cornell Ph.D. and media heir who started the business at the University City Science Center in 2009, convened four of his favorite Philly AI enthusiasts to explain how AI is already changing design and production.

They were Brad Flaugher, an MIT and Wharton grad turned software company founder who wrote the how-to book AI Harmony; Josh Devon, cofounder of Flashpoint, which makes threat-intelligence software; and a pair of NextFab’s own staff: Drexel-trained senior engineer Matt Starfield, a past electropunk and heavy-metal musician with Philly’s Mose Giganticus, and IT chief Michael Grusell, who built systems for Citibank. Here’s some of what they told the Inquirer.

This interview has been condensed for brevity and clarity.

Are these ‘generative’ AI platforms just fancy search engines? How are you using this?

Devon: Every company now is an AI company or waiting to be. It’s just as in the 1990s everyone was asking, “Where are you on the web?”

I’ve been working with computational linguists to apply AI [chat panels to validate and assess start-ups in] the venture-building process. We suck down pieces of the internet into the query, then apply ChatGPT questions, like we were a junior analyst team looking at the potential for tech disruption. Imagine [a virtual panel with] Elon Musk, Steve Jobs, Lao Tzu, and Mother Teresa and have them debate things we prototype from economic and moral directions.

Or we’ll take a securities-trading idea and spin it up to look for market signals, then figure out what to try next.

One start-up I was helping, Redcoat AI, just came out of stealth. It’s dealing with this increasing problem, an AI problem, that within seconds of a YouTube video [getting posted online], you can now use AI to make a “chatbox” that can [clone the voice of] the person in the video.

So suddenly we have all these soundalike “CEOs” calling employees and “phishing” them: “Send me $3,000 immediately!” Redcoat can tell you if the calls are real or automated.

Who can use these AI tools?

Starfield: Anyone. I’m an engineer, but I don’t have formal IT training.

Last year that AI stuff started piquing our interest. What lit a fire was Brad’s book, AI Harmony. It’s one big lesson on how to use these applications. Now I’m a passionate evangelist.

Before AI, I’d Google some keywords, read four or five linked articles, and find one that answers the question. Now I type the question into GPT, and it spits out the answer. It saves 40 minutes of research and gives me more keywords and touchpoints to ask the right question.

ChatGPT opened up the world to be able to use different LLMs (large language models). I usually have browsers open to [Anthropic’s] Claude 3, Perplexity, Mistral, Google. I’ll ask them all the same question and see what they are giving us.

You’re still responsible for the validity of the answer — these can hallucinate, they can give you the wrong information, you have to verify.
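Starfield’s habit of putting one question to several models and checking the answers against each other can be sketched in a few lines of Python. This is a minimal illustration, not anything NextFab runs: the helpers `ask_all` and `answers_disagree` and the two stand-in model functions are hypothetical, and a real version would swap the stand-ins for calls to each vendor’s own client library.

```python
from typing import Callable, Dict

# A "model" here is any function that takes a question and returns an answer.
# These are hypothetical stand-ins, not real vendor APIs.
ModelFn = Callable[[str], str]


def ask_all(question: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same question to every model and collect the answers by name."""
    return {name: ask(question) for name, ask in models.items()}


def answers_disagree(answers: Dict[str, str]) -> bool:
    """Return True when the answers differ after trimming and lowercasing.

    Disagreement is a cue to check sources by hand. Agreement does not
    prove the answer is right, so verification is still on the user.
    """
    normalized = {text.strip().lower() for text in answers.values()}
    return len(normalized) > 1


# Stand-in models used only to make the sketch runnable.
def model_a(question: str) -> str:
    return "Paris"


def model_b(question: str) -> str:
    return "Lyon"


answers = ask_all(
    "What is the capital of France?",
    {"model_a": model_a, "model_b": model_b},
)
if answers_disagree(answers):
    print("Models disagree, verify before using:", answers)
```

The comparison is deliberately crude. Real answers rarely match word for word, so in practice a person still reads them side by side, as Starfield describes.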

What’s Translate Tribune?

Flaugher: It’s my fully autonomous news aggregator. I’m stealing articles. It visits all these news sources — U.S., Turkey, Russia, Kenya, Israel, Iran, the People’s Daily in China, friends and foes — selects articles and translates them, and publishes a “newspaper” every day.

Here’s how it picks them for us: I told ChatGPT “You were a CIA analyst” and had it create a page of international news. Then I set up a finance page and told it, “You worked for Goldman Sachs, and you are translating for an American audience.”

It has every language. The Mistral AI platform from France is best at European languages. Claude 3 is handling Asian languages, Farsi, Arabic, Swahili.
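The setup Flaugher describes, a persona line for each page plus a different model for each group of languages, could look roughly like the sketch below. It is an assumption-laden illustration, not Translate Tribune’s code: the language codes, the labels `"mistral"` and `"claude-3"`, and the helpers `pick_model` and `build_prompt` are all hypothetical, and the translation instruction in the prompt is invented for the example. Only the two persona lines come from his description.

```python
# Persona lines quoted from the interview, keyed by hypothetical page names.
PERSONAS = {
    "world": "You were a CIA analyst.",
    "finance": (
        "You worked for Goldman Sachs, and you are translating "
        "for an American audience."
    ),
}

# Hypothetical language groupings, using ISO 639-1 codes as an assumption.
MISTRAL_LANGS = {"fr", "de", "es", "it"}   # European languages
CLAUDE_LANGS = {"zh", "fa", "ar", "sw"}    # Chinese, Farsi, Arabic, Swahili


def pick_model(lang: str) -> str:
    """Return a label for the model that should translate this language.

    The labels are plain strings for illustration, not real API model IDs.
    """
    if lang in MISTRAL_LANGS:
        return "mistral"
    if lang in CLAUDE_LANGS:
        return "claude-3"
    raise ValueError(f"No model configured for language: {lang}")


def build_prompt(page: str, article_text: str) -> str:
    """Combine the page's persona with an (assumed) translation instruction."""
    persona = PERSONAS[page]
    return (
        f"{persona}\n\n"
        "Translate the following article into English:\n\n"
        f"{article_text}"
    )


print(pick_model("fa"))
print(build_prompt("finance", "Texte de l'article..."))
```

Keeping the persona text and the language routing in plain dictionaries and sets means a new page or a new language is a one-line change.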

You focus on professional newsrooms, not random ‘citizen journalists’?

Flaugher: If you’re not paying for information, you’re getting propaganda. News is a premium product. You pay for news you trust.

But I’m not doing Translate Tribune for money; it’s proof of concept. The Wall Street Journal has a paywall, but it’s kind of irrelevant, I can get around it. I was paying $600 a year to the Wall Street Journal and New York Times. I canceled those. I do pay the [AI platforms] fees — about a dollar a day.

How can news organizations stay in business if you’re giving their product away?

Flaugher: I’m getting people to donate. Individuals starting podcasts find that if you get a loyal enough following, they’ll support you.

So the future of the news business looks a lot like Tucker Carlson. People will pay him to keep him interviewing people. Just like I keep subscribing to The Inquirer and the Economist. Donations are keeping news organizations around.

Devon: This all still has to shake out. OpenAI is being sued by the New York Times. I think it will be hard for the Times to say models can’t be trained on their data.

That’s how the internet works. Everyone is scraping everyone’s work. Would they pass a law that made that illegal? Google is paying Reddit to scrape their data. Maybe the Times will get Microsoft and Amazon and Google to pay them, or tie a product to AI.

Grusell: Clearly the [news organizations] want to encourage large tech corporations to subscribe. There will eventually be legislation. I wouldn’t hold my breath; the question of paying for source material has to percolate through the legal system for some time.

Do you worry about destroying people’s jobs?

Devon: You’ll always need humans in the loop to do this stuff. You might first need fewer. It’s cheaper than ever to start a company. Brad started one for like $20 the other weekend.

But then tech will need more jobs because it creates more needs and problems to solve. AI can help democratize people’s ability to solve problems they are passionate about and make money doing so.

We call [AI applications] “prompt engineering,” writing prompts with emotive, clear, precise language that gets the results you want. You don’t need to understand the math.

Malone: So we’re trying to elicit interest in AI among our members before they are displaced by it.

We have members who are freelancers. They make physical products and do graphic design. We need to embrace this and level up so they are not replaced by AI-generated images.

Generative AI lowers the barrier on who can use these tools. So less-technical people can do more with technology.