Elon Musk’s understanding of free speech on Twitter is flawed

I know what happens on platforms without content moderation. It's not pretty.

In October, I was home alone, in bed with the baby and still recovering from the cesarean. It hurt to walk, and I didn’t want to expose my newborn to COVID-19; I wasn’t answering the door, no matter how hard the maintenance guy knocked.

Then, my phone rang. It was my downstairs neighbor. The knocking wasn’t the maintenance man, she explained. It was the police.

I made my way to the door, painfully. Someone had called in to report a rape in my apartment, the officers told me.

This isn’t the first time my family has been “swatted,” a tactic in which someone calls in a false report to draw police to a target’s home. In the worst cases, swatting can be deadly.

Ever since I began writing about and organizing against the extreme far right in 2018, I’ve faced harassment from organized white supremacists. The threats and bullying may start on the internet, but they don’t stay there. Online harassment by Philadelphia Proud Boys quickly escalated to a vandalizing visit to my building in 2019 and an intimidation rally planned across the street from me in Clark Park in 2020.

It was when I started organizing to shut down the explicitly pro-terrorism Nazi forums known as Terrorgram that the harassment turned potentially deadly. Terrorgram exists on Telegram, a messaging platform known for its hands-off approach to moderation. Telegram’s laissez-faire environment allowed a universe of terroristic Nazi forums to proliferate, including channels specifically dedicated to doxxing (the release of personal information like addresses, phone numbers, and family member names) and harassment. Their targets included not only researchers like me, but also people whose only offense was being a member of a hated group. If you have a public profile and are trans, Jewish, Black, or a member of any of a host of other marginalized identities, you might very well end up in their crosshairs.

My work studying the dynamics of Telegram has taught me time and time again that moderation matters. On Telegram, Proud Boys eagerly shared my personal information, including addresses for myself and my family, with each other in their chats.

In other channels, Nazis photoshopped graphics of my severed head on a meat hook, then shared these violent images along with my address and exhortations to deadly violence against me. Soon afterward, our neighbors warned us that strangers had been pounding at the building door. Later, someone swatted my parents, claiming to police that my father had a weapon and was planning to murder me. A SWAT team surrounded their home with guns drawn, ordering my terrified sister and mother out of the house and onto the lawn.

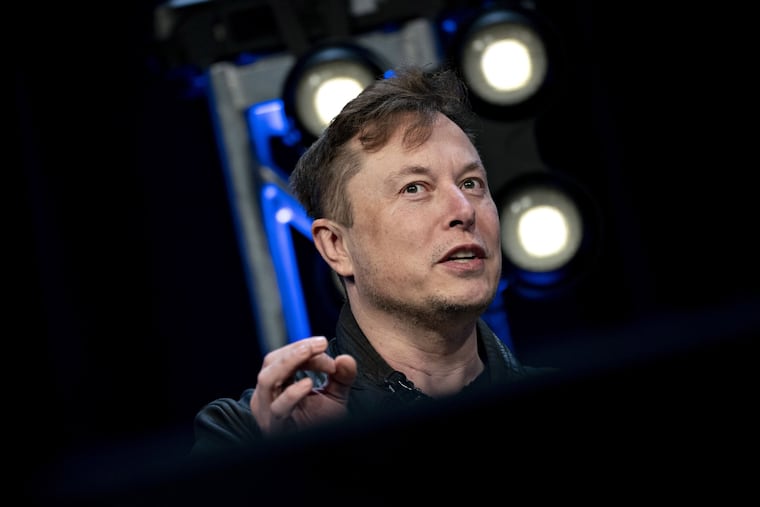

This is what I think about when I hear Elon Musk railing against Twitter’s supposed censorship. It’s what I consider when he proudly announces that he is a “free speech absolutist” and that this mind-set will inform his approach to Twitter, the platform he is set to purchase and control.

Musk’s understanding of free speech is fundamentally flawed. Freedom of speech under the First Amendment guarantees citizens the right to say what we wish, not the right to be platformed. Twitter is not obligated to allow its users to tweet the N-word or incite insurrection any more than Beyoncé is obligated to hand her microphone over to any random concertgoer who wants to duet. Free speech gives us the right to say what we want, but it also gives others the right to decide whom they do and don’t want to amplify.

Indeed, an anything-goes approach to speech tends to silence the marginalized even as it empowers the already-powerful. Platforms that forgo meaningful content moderation — Telegram and 8chan, for example — inevitably become spaces that allow the hateful to bully marginalized people into a fear-instilled silence. When online forums default to the sort of “free speech absolutism” Musk embraces, there is a chilling effect. Vulnerable users begin to self-censor to avoid harassment, knowing online hate can easily lead to offline violence.

Twitter’s current moderation model is far from perfect. Its unnecessarily clunky automation frequently allows hateful content to stay up, while often erroneously flagging legitimate commentary as prohibited content. Musk’s “free speech” crusade, however, has nothing to do with protecting the vulnerable from censorship. If successful, his quest will only feed harassment, allowing toxic actors to overrun the website.

If Musk persists, Twitter will still be a censored platform. The censorship will simply come in the form of mass harassment and death threats aimed at silencing those who already have the hardest time being heard.

Gwen Snyder is a Philadelphia-based researcher, organizer, and writer working to counter fascism and the far right. @gwensnyderPHL