Can driverless cars be safe? Grand Theft Auto helps Penn scientists find out

University of Pennsylvania scientists are using video games and toy cars to learn how autonomous vehicles think, and to determine whether they are safe for people to use.

A sporty black sedan speeds dangerously close to a cliff on a road winding through an arid landscape.

The car recovers and swerves back onto the cracked asphalt, but another sharp turn is coming. It straddles the edge of the cliff, its tires spinning through pale, sunburned sand. Then it falls. Sagebrush and rock outcroppings blur past as it plummets.

No driver emerges from the car. No police show up. A virtual reality sun keeps beating down.

The crash occurred in a modified Grand Theft Auto video game, an example of the virtual simulations researchers at the University of Pennsylvania are running to evaluate autonomous vehicles, a technology that in the coming years could transform the way Americans get around.

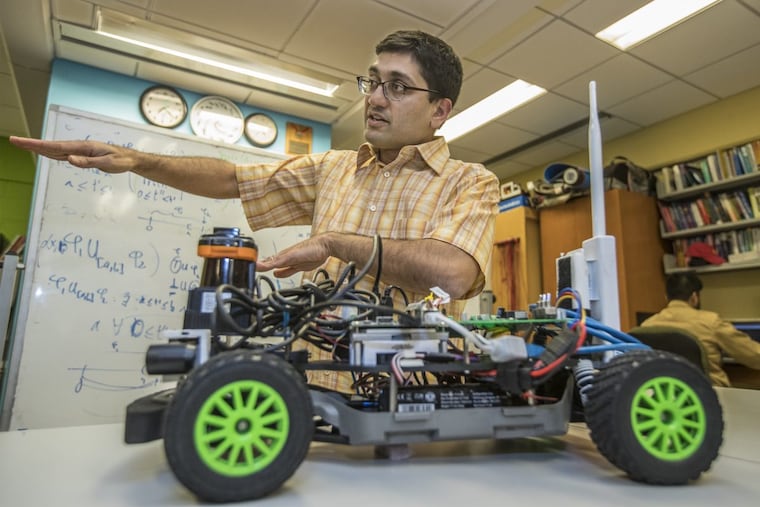

"We can crash as many cars as we want," said Rahul Mangharam, associate professor at the University of Pennsylvania's Department of Electrical and Systems Engineering.

Mangharam and his team of six are pursuing what they describe as a "driver's license test" for self-driving cars, a rigorous use of mathematical diagnostics and simulated reality to determine the safety of autonomous vehicles before they ever hit the road.

Complicating that task is the nature of the computer intelligence at the heart of the car's operation. The computer is capable of learning, but instead of eyes, ears, and a nose, it perceives reality with laser sensors, cameras, and infrared. It does not see or process the world like a human brain. Working with this mystery that scientists call "the black box" is a daunting, even spooky, element of the work at Penn.

"They're not interpretable," Mangharam said. "We don't know why they reached a certain decision; we just know they reached a certain decision."

Clarity on how safe driverless vehicles can be is a critical step to maturing a technology many think will some day save thousands of lives.

Last year, 37,461 people died in vehicle crashes in the United States, the National Highway Traffic Safety Administration reported — enough to fill more than half of Lincoln Financial Field. About 94 percent of crashes happen because of human mistakes, NHTSA has found, and self-driving cars hold the promise of preventing many of those deaths.

The technology is not yet ready for prime time, most experts agree, and a premature introduction could result in deaths. Experts fervent in the belief that driverless cars eventually will be life savers fear fatal crashes caused by autonomous system failures could scare the public and delay adoption of the technology for years.

"They need to be very transparent in the development of this technology," said Leslie Richards, Pennsylvania's transportation secretary. "To get the public buy-in, people do need to understand."

In Pennsylvania, much attention related to autonomous cars is focused on Pittsburgh, where last year Uber began operating self-driving cars and Carnegie Mellon University has positioned itself as a leader in the field. But Penn, along with CMU, is a key player in Mobility21, a five-year, federally funded, $14 million program to explore transportation technology, including self-driving vehicles. While colleagues at CMU experiment with their own autonomous car, Penn's scientists work in a lab that looks like a very bright middle schooler's rec room.

Whiteboards are covered in complex equations, but the shelves hold jury-rigged toy cars, and the computer screens display video games. It's all in service of rating robot drivers: measuring how much variation in the environment and in the car itself the system can withstand without a failure. The researchers virtually drive cars in different weather and lighting, testing how well the software rolls with the changes it would face in the real world.
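To make the idea of rating a driver across conditions concrete, here is a minimal sketch in Python. It is not the Penn team's code: the condition lists, the `run_episode` stand-in, and its toy failure rule are all assumptions made so the example runs. A real study would replace `run_episode` with an actual simulated drive.

```python
from itertools import product

# Hypothetical condition grids; a real test plan would be far larger.
WEATHER = ["clear", "rain", "fog"]
LIGHTING = ["noon", "dusk", "night"]


def run_episode(weather, lighting):
    """Hypothetical stand-in for one simulated drive; True means no failure."""
    # Toy rule so the sketch is runnable: the pretend driver fails in fog at night.
    return not (weather == "fog" and lighting == "night")


def robustness_report(simulate=run_episode):
    """Drive every weather/lighting combination and summarize the failures."""
    failures = [
        (weather, lighting)
        for weather, lighting in product(WEATHER, LIGHTING)
        if not simulate(weather, lighting)
    ]
    total = len(WEATHER) * len(LIGHTING)
    return {"pass_rate": (total - len(failures)) / total, "failures": failures}


report = robustness_report()
print(report["failures"])
```

The list of failing combinations is the useful part of the report: it shows which changes in the environment the system could not withstand, not just how often it failed.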

"You can never have 100 percent safety," Mangharam said. "You can design a system that would not be at fault intentionally."

Mangharam describes autonomous vehicles as continuously executing a three-step process. The first step is perception, the system's attempt to understand what is in the world around it. It should be able to spot a stop sign and other vehicles on the road. Then the gathered data is used to make a plan, which starts with the final destination, formulates a route, and then decides how to navigate that route. The car decides what speed, braking, and trajectory are needed to, for example, get around a slow-moving car on the highway while trying to reach an off-ramp. The third step is the process of driving, the application of brakes, gas, and steering to get where the vehicle is directed to go.
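The three steps can be pictured as a loop of perceive, plan, and act. The Python sketch below is only an illustration of that structure, not the software Penn tests. Every name, the 30-meter stopping distance, the cruise speed, and the simple proportional speed control are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorldView:
    # Distance to a detected stop sign in meters; None if none was detected.
    stop_sign_distance_m: Optional[float]


@dataclass
class Plan:
    target_speed_mps: float


@dataclass
class Command:
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0


def perceive(sensor_frame: dict) -> WorldView:
    """Step 1: turn raw sensor data into a picture of the world (toy version)."""
    return WorldView(stop_sign_distance_m=sensor_frame.get("stop_sign_distance_m"))


def make_plan(view: WorldView, cruise_speed_mps: float = 15.0) -> Plan:
    """Step 2: decide what to do; here, stop for any sign closer than 30 m."""
    if view.stop_sign_distance_m is not None and view.stop_sign_distance_m < 30.0:
        return Plan(target_speed_mps=0.0)
    return Plan(target_speed_mps=cruise_speed_mps)


def control(planned: Plan, current_speed_mps: float) -> Command:
    """Step 3: apply gas or brake in proportion to the speed error."""
    error = planned.target_speed_mps - current_speed_mps
    if error >= 0:
        return Command(throttle=min(1.0, 0.1 * error), brake=0.0)
    return Command(throttle=0.0, brake=min(1.0, -0.1 * error))


def drive_step(sensor_frame: dict, current_speed_mps: float) -> Command:
    """One pass through perception, planning, and driving."""
    return control(make_plan(perceive(sensor_frame)), current_speed_mps)


print(drive_step({"stop_sign_distance_m": 20.0}, current_speed_mps=10.0))
```

Keeping the three steps as separate functions mirrors the way a tester would want to inspect each stage on its own.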

The Penn scientists run the autonomous driving software, called Computer Aided Design for Safe Autonomous Vehicles, through both mathematical diagnostics and the virtual reality test drives on Grand Theft Auto to see when the system fails. The video game is particularly useful because the autonomous driving system can be rigged to perceive it similarly to reality and because the virtual environment can be perfectly controlled by scientists.

The autonomous driver's inscrutable nature can be challenging, though. Deep neural networks teach themselves how to identify objects through a process of trial and error as they are fed thousands of images of people, trees, intersections, anything a car may encounter on the road. They become increasingly accurate the more examples they are fed, but humans cannot know what commonalities and features a machine is fixating on when it perceives a tree, for example, and correctly labels it as such. Those features are almost certainly not the ones a human uses (a trunk, leaves, the texture of bark) to distinguish between a tree and a telephone pole.

Because of the uncertainty about how the robot driver is identifying objects, researchers are concerned it might be coming to the right answer, but for the wrong reasons.

Mangharam used the example of a tilted stop sign. Under normal circumstances, the computer could recognize a stop sign correctly every time. However, if the sign were askew, that could throw off the features the computer uses to recognize it and a car could drive right past it. Scientists need to understand not just what the car does wrong, but at what stage of the driving process the error happens.

"Was the cause of the problem that it cannot perceive the world correctly and made a bad decision," Mangharam explained, "or did it perceive the world correctly and make a bad decision?"
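In a simulation the true state of the world is known, so the question of which stage failed can be asked directly. The Python sketch below shows one simple way to frame it for the stop sign example. The function names and the two-way "perception" versus "planning" labeling are assumptions for illustration, not the researchers' diagnostic method.

```python
def expected_action(stop_sign_present: bool) -> str:
    """The correct behavior given what is really in the world."""
    return "stop" if stop_sign_present else "proceed"


def attribute_fault(truth_stop_sign: bool, perceived_stop_sign: bool, action: str) -> str:
    """Label where a bad outcome came from, using the simulator's ground truth."""
    if action == expected_action(truth_stop_sign):
        return "none"        # the car did the right thing
    if perceived_stop_sign != truth_stop_sign:
        return "perception"  # the car misread the world
    return "planning"        # the car saw correctly but decided badly


# A tilted sign the system failed to recognize, so the car drove past it.
print(attribute_fault(truth_stop_sign=True, perceived_stop_sign=False, action="proceed"))
```

On the road there is no ground truth to compare against, which is part of why a fully controlled virtual environment is useful for this kind of diagnosis.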

While talk of errors and failures evokes visions of flaming wrecks and cars careening off bridges, what is perhaps more likely is paralysis.

"The idea of plunking a fully autonomous car down in New York City or downtown Philly, it is very likely that given the current state of technology and the very, very conservative nature that an autonomous vehicle is going to take because of that liability … I would hearken to guess that car is never going to move," said Greg Brannon, director of automotive engineering for AAA. "It's going to look for a break in traffic that's never going to exist."

AAA is already doing safety testing on partially autonomous systems such as adaptive cruise control, lane keeping, and emergency braking. Some scientists think autonomy will happen abruptly: One company will perfect a product that is born able to handle anything it might encounter on the open road. Brannon, though, sees autonomous systems being introduced gradually, so people would be less likely to find the concept as alien as today's drivers do.

"People will experience things in bits and pieces," he said, "and it will breed trust in these systems."

Pennsylvania has passed legislation governing the testing of autonomous vehicles on the state's roads, and Richards said she and her counterparts in other states frequently talk about what kind of regulatory framework might be needed as full autonomy comes closer to reality. A driver's license test, as Mangharam proposes, is one possibility, though she said it would likely require cooperation between states and the federal government to decide on safety standards. The standard would have to consider that, at least initially, robot drivers would likely be sharing the road with many humans behind the wheel, she said.

"We all know that any incidents of hazards tied to autonomous or collective vehicles will set everybody back," said Richards, who is convinced the technology will ultimately save lives. "We really want to proceed as cautiously as possible to maintain this positive moment."

A common question about driverless vehicles is how soon the general public will start using them. As much as he believes in autonomous technology, Mangharam is worried by a tendency in our society to leave regulatory oversight in the dust as we embrace a new toy.

"I don't think we should be focusing on a date," he said, "until we reach some safety threshold."